Nvidia’s rivals and biggest customers are rallying behind an OpenAI-led initiative to build software that would make it easier for artificial intelligence developers to move away from its chips.

Silicon Valley-based Nvidia has become the world’s most valuable chipmaker thanks to a near monopoly on the chips needed to create large AI systems, but supply shortages and high prices are forcing customers to look for alternatives.

However, creating new AI chips only solves part of the problem. While Nvidia is best known for its powerful processors, industry figures say the “secret sauce” is its Cuda software platform, which allows chips originally designed for graphics purposes to accelerate AI applications.

At a time when Nvidia is investing heavily to expand its software platform, rivals like Intel, AMD and Qualcomm are targeting Cuda in hopes of luring customers away – with the eager support of some of Silicon Valley’s biggest companies.

Engineers from Meta, Microsoft and Google are helping develop Triton, software to run code efficiently on a wide range of AI chips, which OpenAI released in 2021.

Even as they continue to spend billions of dollars on Nvidia’s latest products, major tech companies are hoping that Triton will help break its stranglehold on AI hardware.

“Essentially, it breaks the Cuda lock-in,” said Greg Lavender, Intel’s chief technology officer.

Nvidia dominates the market for building and deploying large language models, including the system behind OpenAI’s ChatGPT. That has pushed its valuation to more than $2 trillion, leaving rivals Intel and AMD scrambling to catch up. Analysts expect Nvidia to report this week that its latest quarterly revenue more than tripled year over year, with profits up more than sixfold.

But Nvidia’s hardware has only become so popular thanks to the accompanying software it has developed over nearly two decades, creating a formidable “moat” that competitors have struggled to overcome.

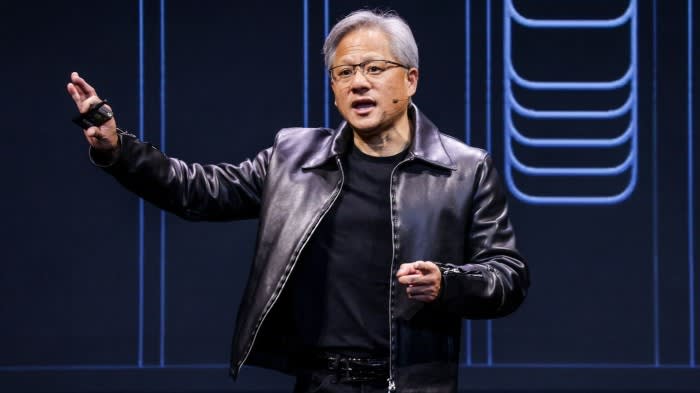

“What Nvidia does for a living is not [just] build the chip: we build an entire supercomputer, from the chip to the system and the interconnections . . . but very importantly the software,” said CEO Jensen Huang at the GPU Technology Conference in March. He has described Nvidia’s software as the “operating system” of AI.

Nvidia was founded more than 30 years ago to target video gamers. Its move into AI was made possible by the Cuda software, which the company created in 2006 to run general purpose applications on its graphics processing units.

Since then, Nvidia has invested billions of dollars to build hundreds of software tools and services to make running AI applications on its GPUs faster and easier. Nvidia executives say it now employs twice as many software engineers as hardware staff.

“I think people underestimate what Nvidia has actually built,” said David Katz, a partner at Radical Ventures, an AI-focused investor.

“They have built an ecosystem of software around their products that is efficient, easy to use and really works – and makes very complex things simple,” he added. “It’s something that has developed over a very long time within a huge community of users.”

Nevertheless, the high price of Nvidia’s products and the long queue to buy the most advanced equipment, such as the H100 and the upcoming GB200 “superchip”, have prompted some of its largest customers (including Microsoft, Amazon and Meta) to seek alternatives or develop their own.

However, because most AI systems and applications already run on Nvidia’s Cuda software, it is time-consuming and risky for developers to rewrite them for other processors, such as AMD’s MI300, Intel’s Gaudi 3 or Amazon’s Trainium.

“The point is that if you want to compete with Nvidia in this space, you not only have to build hardware that is competitive, you also have to make it easy to use,” said Gennady Pekhimenko, CEO of CentML, a start-up that creates software to optimize AI tasks, and an associate professor of computer science at the University of Toronto. “Nvidia’s chips are really good, but in my opinion their biggest advantage is on the software side.”

Rivals like Google’s TPU AI chips may offer similar performance in benchmark tests, but “convenience and software support make a big difference” in Nvidia’s favor, Pekhimenko said.

Nvidia executives claim the software work makes it possible to deploy a new AI model on the latest chips in seconds and provides continuous efficiency improvements. But those benefits come with a catch.

“We see a lot of Cuda lock-in in the [AI] ecosystem, which makes it very difficult to use non-Nvidia hardware,” said Meryem Arik, co-founder of TitanML, a London-based AI start-up. TitanML started out using Cuda, but last year’s GPU shortages prompted the company to rewrite its products in Triton. Arik said this helped TitanML win new customers who wanted to avoid what she called the “Cuda tax.”

Triton, whose co-creator Philippe Tillet was hired by OpenAI in 2019, is open source, meaning anyone can view, modify or improve its code. Proponents argue that this gives Triton inherent appeal to developers over Nvidia-owned Cuda. Triton initially worked only with Nvidia’s GPUs, but now supports AMD’s MI300, with support for Intel’s Gaudi and other accelerator chips planned soon.

Meta, for example, has put Triton software at the heart of its internally developed AI chip, MTIA. When Meta released the second-generation MTIA last month, engineers said Triton was “highly efficient” and “sufficiently hardware agnostic” to work with a range of chip architectures.

Developers from OpenAI rivals like Anthropic – and even Nvidia itself – have also been working to improve Triton, according to logs on GitHub and conversations about the toolkit.

Triton isn’t the only attempt to challenge Nvidia’s software advantage. Intel, Google, Arm and Qualcomm are among the members of the UXL Foundation, an industry alliance developing a Cuda alternative based on Intel’s open-source OneAPI platform.

Chris Lattner, a prominent former senior engineer at Apple, Tesla and Google, has launched Mojo, a programming language for AI developers whose pitch includes: “No Cuda required.” Only a small minority of the world’s software developers know how to code with Cuda, and it’s difficult to learn, he argues. With his start-up Modular, Lattner hopes Mojo will make it “dramatically easier” to build AI for “all types of developers – not just the elite experts at the largest AI companies”.

“Today’s AI software is built using software languages from over fifteen years ago, similar to using a BlackBerry today,” he said.

Even if Triton or Mojo are competitive, it will take Nvidia’s rivals years to catch up to Cuda’s lead. Analysts at Citi recently estimated that Nvidia’s share of the generative AI chip market will decline from about 81 percent next year to about 63 percent in 2030, suggesting that Nvidia will remain dominant for many years to come.

“Building a competitive chip against Nvidia is a hard problem, but an easier problem than building the entire software stack and letting people use it,” Pekhimenko said.

Intel’s Lavender remains optimistic. “The software ecosystem will evolve,” he said. “I think it will level the playing field.”

Additional reporting by Camilla Hodgson in London and Michael Acton in San Francisco