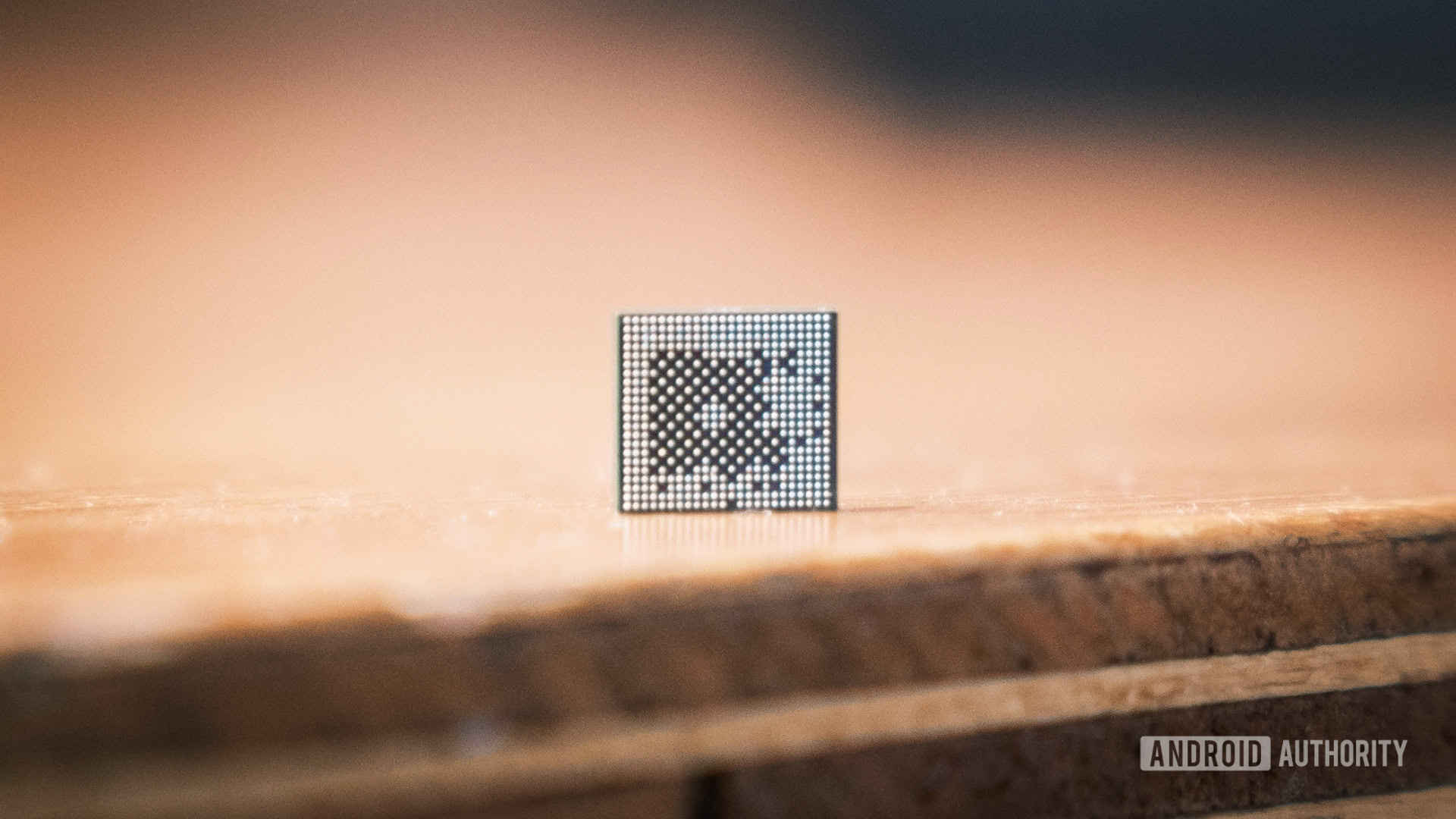

Robert Triggs / Android Authority

If you’ve been considering a new laptop, you’ll no doubt have noticed that they increasingly feature NPU capabilities that look very much like the hardware we’ve seen in the very best smartphones for a number of years. The driving factor is the push for laptops to catch up with mobile AI capabilities, equipping them with advanced AI features, such as Microsoft’s Copilot, that can run securely on the device without the need for an internet connection. So here’s everything you need to know about NPUs, why your next laptop might have one, and whether or not you should buy one.

What is an NPU?

NPU is an acronym for Neural Processing Unit. NPUs are intended to perform mathematical functions related to neural networks/machine learning/AI tasks. While these can be standalone chips, they are increasingly being integrated directly into a system-on-chip (SoC), alongside more familiar CPU and GPU components.

NPUs are dedicated to accelerating machine learning, or AI, tasks.

NPUs come in different shapes and sizes and are often called something different depending on the chip designer. You can already find different models spread across the smartphone landscape. Qualcomm has Hexagon in its Snapdragon processors, Google has its TPUs for both the cloud and its mobile Tensor chips, and Samsung has its own implementation for Exynos.

The idea is now also gaining ground in laptops and PCs. For example, there’s the Neural Engine in the latest Apple M4, Qualcomm’s Hexagon features in the Snapdragon X Elite platform, and AMD and Intel have started integrating NPUs into their latest chipsets. While not quite the same thing, NVIDIA’s GPUs blur the lines, given their impressive number-crunching capabilities. NPUs are increasingly ubiquitous.

Why do gadgets need an NPU?

As we mentioned, NPUs are purpose-built to handle the workload of machine learning (along with other math-heavy tasks). In layman’s terms, an NPU is a very useful, perhaps even essential, component for running AI on-device rather than in the cloud. As you’ve no doubt noticed, AI seems to be everywhere these days, and incorporating support directly into products is an important step in that journey.

Much of today’s AI processing takes place in the cloud, but this is not ideal for several reasons. First, there are the latency and network requirements; you may not be able to access tools when offline or may have to wait for long processing times during peak hours. Sending data over the internet is also less secure, which is a very important factor when using AI that has access to your personal data, such as Microsoft’s Recall.

Simply put, running on the device is preferable. However, AI tasks are very computationally intensive and do not work well on traditional hardware. You may have noticed this if you’ve tried generating images via Stable Diffusion on your laptop. It can be painfully slow for more advanced tasks, although CPUs can handle some “simpler” AI tasks just fine.

NPUs allow AI tasks to be performed on the device, without the need for an internet connection.

The solution is to use specialized hardware to speed up these advanced tasks. You can read more about what NPUs do later in this article, but the TL;DR is that they perform AI tasks faster and more efficiently than your CPU can on its own. Their performance is often quoted in trillions of operations per second (TOPS), but this isn’t a hugely useful metric because it doesn’t tell you exactly what each operation does. Instead, it’s often better to look for figures that tell you how quickly a chip can process tokens for large models.

Speaking of TOPS, NPUs in smartphones and early laptops are rated in the tens of TOPS. Broadly speaking, this means they can accelerate basic AI tasks, such as detecting objects in a camera frame to apply bokeh blur, or summarizing text. If you want to run a large language model or use generative AI to produce media quickly, you’ll need a more powerful accelerator or GPU in the hundreds or thousands of TOPS range.
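To see why a headline TOPS figure says little on its own, here is a rough back-of-the-envelope sketch. It assumes, as a common rule of thumb rather than anything a chip maker specifies, that generating one token costs about two operations per model parameter, and it ignores memory bandwidth, which is often the tighter limit in practice. The function name, the 7-billion-parameter model, and the utilization values are all illustrative assumptions.

```python
def peak_tokens_per_second(tops: float, params_billions: float,
                           utilization: float = 1.0) -> float:
    """Compute-bound ceiling on tokens per second.

    Assumes ~2 operations per parameter per generated token (a rule of
    thumb, not a vendor figure) and ignores memory bandwidth entirely.
    """
    ops_per_second = tops * 1e12 * utilization
    ops_per_token = 2 * params_billions * 1e9
    return ops_per_second / ops_per_token


if __name__ == "__main__":
    # Hypothetical 7-billion-parameter model on accelerators of different sizes.
    for tops in (10, 40, 400):
        for utilization in (1.0, 0.1):
            rate = peak_tokens_per_second(tops, 7, utilization)
            print(f"{tops:>4} TOPS at {utilization:.0%} utilization: "
                  f"at most {rate:,.0f} tokens/s")
```

The same TOPS rating produces very different ceilings depending on how much of the hardware a given model can actually keep busy, which is why measured tokens-per-second figures are the more useful comparison.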

Is an NPU different from a CPU?

A neural processing unit is quite different from a central processing unit because of the type of workload it is designed for. A typical CPU in your laptop or smartphone is fairly general purpose, built to cater to a wide range of applications. It supports broad instruction sets (the functions it can perform), various ways of caching and recalling functions (to speed up repeating loops), and large out-of-order execution windows (so it can keep doing things instead of waiting).

However, machine learning workloads are different and don’t require as much flexibility. For starters, they are much more math-intensive and often require repetitive math-intensive instructions such as matrix multiplication and very fast access to large memory pools. They also often work with unusual data formats, such as sixteen-, eight-, or even four-bit integers. By comparison, your typical CPU is built around 64-bit integers and floating point arithmetic (often with extra instructions added).
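The appeal of those smaller data formats is easy to show with simple arithmetic: fewer bits per weight means less memory to store and move. The sketch below only counts the weights themselves, and the 7-billion-parameter model is a hypothetical example rather than anything from a specific product.

```python
# Bits used to store one weight in each format.
BITS_PER_WEIGHT = {"FP32": 32, "FP16": 16, "INT8": 8, "INT4": 4}


def weights_size_gb(num_params: float, fmt: str) -> float:
    """Storage needed for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BITS_PER_WEIGHT[fmt] / 8 / 1e9


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter model
    for fmt in BITS_PER_WEIGHT:
        print(f"{fmt}: {weights_size_gb(params, fmt):.1f} GB")
```

Halving the bit width halves the memory the chip has to hold and stream, which matters on devices where RAM is shared with everything else.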

An NPU is faster and more energy efficient when performing AI tasks compared to a CPU.

Building an NPU specifically for massively parallel computation of these functions results in faster performance and less power wasted on idle features that aren’t useful for the task at hand. However, not all NPUs are equal. Even beyond their raw number-crunching capabilities, they can be built to support different integer types and operations, meaning some NPUs are better at working with certain models. For example, some smartphone NPUs run on INT8 or even INT4 formats to save on power consumption, but you’ll get better accuracy from a more advanced but power-hungry FP16 model. If you really need advanced computing power, dedicated GPUs and external accelerators are still more powerful and support a wider range of formats than integrated NPUs.
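The accuracy trade-off between formats can be illustrated with a toy example. The sketch below is a minimal, pure-Python illustration and not how any particular NPU is implemented: it uses simple symmetric quantization (one of several schemes), a made-up 2x2 weight matrix, and hypothetical helper names. It multiplies a matrix by a vector using only integer multiply-accumulate steps, then compares the result with the floating-point answer.

```python
def quantize(values, bits):
    """Symmetric quantization: map floats to signed integers of a given width."""
    qmax = 2 ** (bits - 1) - 1                  # 127 for INT8, 7 for INT4
    scale = (max(abs(v) for v in values) / qmax) or 1.0
    return [max(-qmax, min(qmax, round(v / scale))) for v in values], scale


def matvec_float(weights, x):
    """Reference matrix-vector product in floating point."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def matvec_quantized(weights, x, bits):
    """Same product, but the multiply-accumulate runs on small integers."""
    flat, w_scale = quantize([w for row in weights for w in row], bits)
    q_x, x_scale = quantize(x, bits)
    n = len(x)
    q_rows = [flat[i:i + n] for i in range(0, len(flat), n)]
    acc = [sum(qw * qx for qw, qx in zip(row, q_x)) for row in q_rows]
    # Convert the integer accumulators back to real-valued outputs.
    return [a * w_scale * x_scale for a in acc]


def max_abs_error(reference, approx):
    return max(abs(r - a) for r, a in zip(reference, approx))


# Made-up data purely for illustration.
WEIGHTS = [[0.5, -1.0], [0.25, 0.75]]
INPUT = [1.0, 2.0]

if __name__ == "__main__":
    reference = matvec_float(WEIGHTS, INPUT)
    for bits in (8, 4):
        approx = matvec_quantized(WEIGHTS, INPUT, bits)
        print(f"INT{bits}: max error {max_abs_error(reference, approx):.4f}")
```

On this toy input, the four-bit version drifts further from the floating-point result than the eight-bit version, which mirrors the trade-off described above: narrower formats are cheaper to compute but give up some precision.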

As a backup, CPUs can run machine learning tasks, but often much more slowly. Modern CPUs from Arm, Apple, Intel, and AMD support the necessary mathematical instructions and some of the smaller quantization levels. Their bottleneck is often how many of these operations they can run in parallel and how quickly they can move data in and out of memory, which is exactly what NPUs are designed to address.

Should I buy a laptop with an NPU?

While far from essential, especially if you don’t care about the AI trend, NPUs are required for some of the latest features you’ll find in mobile and PC.

For example, Microsoft’s Copilot Plus specifies an NPU with 40 TOPS of performance as the minimum requirement for using Windows Recall. Unfortunately, Intel’s Meteor Lake and AMD’s Ryzen 8000 chips found in current laptops (at the time of writing) don’t meet that requirement. However, AMD’s newly announced Strix Point Ryzen chips are compatible. You won’t have to wait long for an x64 alternative to Arm-based Snapdragon X Elite laptops, as Strix Point-powered laptops are expected in the second half of 2024.

Popular PC-class tools like Audacity, DaVinci Resolve, Zoom, and many others are increasingly experimenting with more demanding on-device AI capabilities. While not essential for core workloads, these features are becoming increasingly popular and AI capabilities should factor into your next purchase if you use these tools regularly.

Copilot Plus is only supported on laptops with a sufficiently powerful NPU.

When it comes to smartphones, the features and capabilities vary from brand to brand. For example, Samsung’s Galaxy AI only works on its powerful flagship Galaxy S handsets. It hasn’t added features like Chat Assist or Interpreter to the affordable Galaxy A55, probably because it doesn’t have the necessary processing power. That said, some of Samsung’s features also run in the cloud, but that processing probably isn’t funded by sales of cheaper handsets. Speaking of which, Google is similarly inconsistent when it comes to features. You’ll find Google’s very best AI extras, like Video Boost, on the Pixel 8 Pro. Still, the Pixel 8 and even the affordable 8a run many of the same AI tools.

Ultimately, AI is here and NPUs are the key to enjoying on-device features that can’t run on older hardware. That said, we’re still in the early days of AI workloads, especially in the laptop space. The software requirements and hardware capabilities will only increase in the coming years. In that sense, there’s no harm in waiting for the dust to settle before jumping in.